文献の詳細

| 論文の言語 | 英語 |

|---|---|

| 著者 | Mizuki Matsubara, Joachim Folz, Takumi Toyama, Marcus Liwicki, Andreas Dengel, Koichi Kise |

| 論文名 | Extraction of Read Text for Automatic Video Annotation |

| 論文誌名 | UbiComp/ISWC'15 Adjunct Proceedings of the 2015 ACM International Joint Conference on Pervasive and Ubiquitous Computing and Proceedings of the 2015 ACM International Symposium on Wearable Computers |

| ページ | pp.849-856 |

| 発表場所 | Osaka, Japan |

| 査読の有無 | 有 |

| 発表の種類 | 口頭発表 |

| 年月 | 2015年9月 |

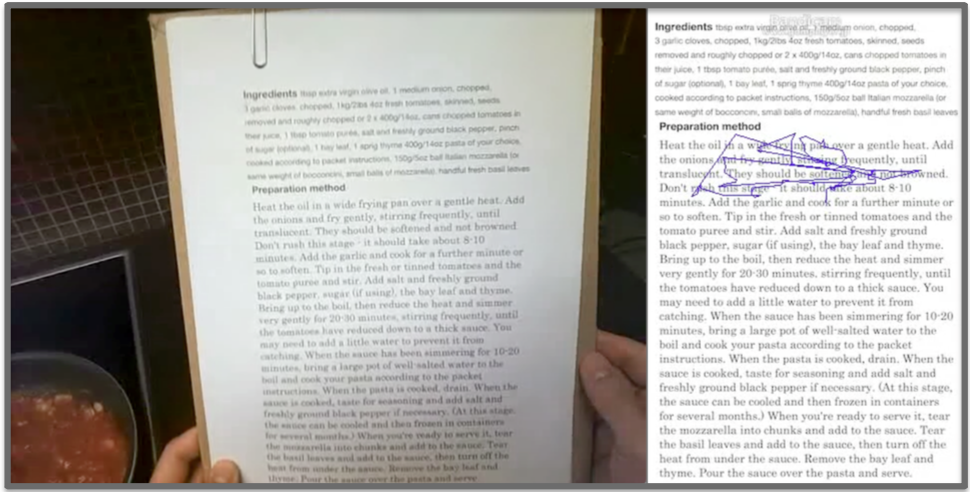

| 要約 | This paper presents an automatic video annotation method which utilizes the user’s reading behaviour. Using a wearable eye tracker, we identify the video frames where the user reads a text document and extract the sentences that have been read by him or her. The extracted sentences are used to annotate video segments which are taken from the user’s egocentric perspective. An advantage of the proposed method is that we do not require training data, which is often used by a video annotation method. We examined the accuracy of the proposed annotation method with a pilot study where the experiment participants drew an illustration reading a tutorial. The method achieved 64:5% recall and 30:8% precision. |

| DOI | 10.1145/2800835.2804333 |

- 次のファイルが利用可能です.

- BibTeX用エントリー

@InProceedings{Matsubara2015, author = {Mizuki Matsubara and Joachim Folz and Takumi Toyama and Marcus Liwicki and Andreas Dengel and Koichi Kise}, title = {Extraction of Read Text for Automatic Video Annotation}, booktitle = {UbiComp/ISWC'15 Adjunct Proceedings of the 2015 ACM International Joint Conference on Pervasive and Ubiquitous Computing and Proceedings of the 2015 ACM International Symposium on Wearable Computers}, year = 2015, month = sep, pages = {849--856}, DOI = {10.1145/2800835.2804333}, location = {Osaka, Japan} }